I almost called this "Why you should always remember that games are illusions", but that sounded way too pretentious and, honestly, kind of sad.

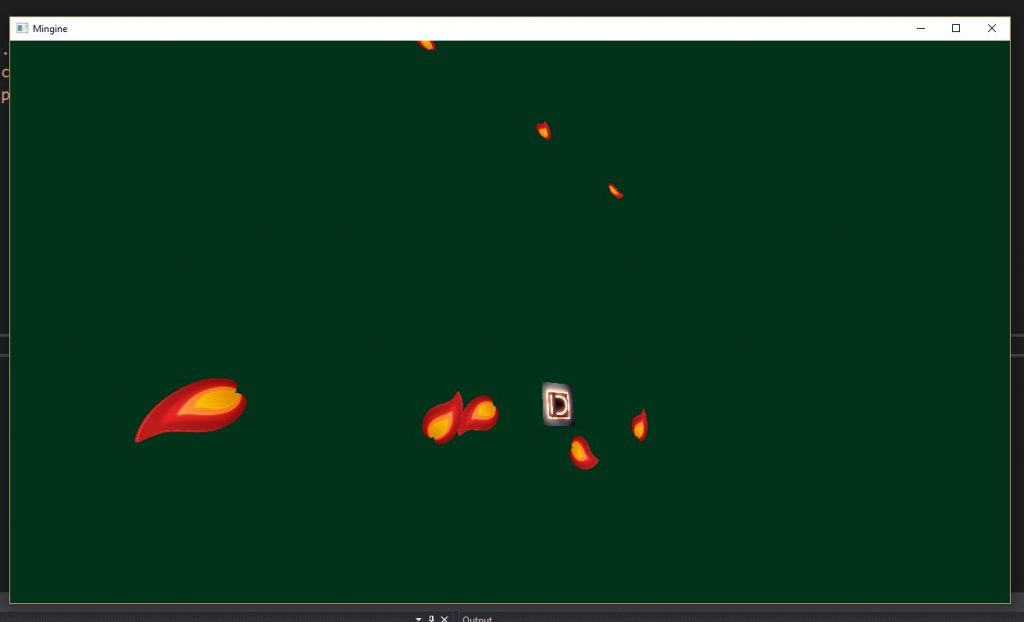

This weekend I participated in Global Game Jam 2017, where the theme was "Waves". Our game, Bermuda Dieangle, involved controlling the ocean surface to make boats crash into each other. Initially, we planned on running a complex wave simulation to make the boat and water physics extremely realistic, and we even had code in place to approximate it: one iteration ran on the CPU in a background thread and ended up being too slow, and another used the GPU to propagate the wave forces, which we never got to work quite right. But in the end, given our relatively limited experience in physical simulation and extremely limited time, we decided to fake it.

Without going into too much detail, each wave was essentially represented by a sphere which grew until it had no more energy. Each boat checked if it was within the sphere of influence of any of these waves and, if so, applied a force away from the wave's origin. Since there were so few waves per frame, this was really cheap to compute and easy to write.

For the visualization, we took this data and generated a heightmap of the ocean surface by rendering each wave to a render texture as the sine of (the distance from the center / the current radius of the wave) + time + a random value unique to each wave. Specifically, we had an orthographic camera pointing down towards the fluid surface and, for each wave, drew a radial texture with the extra math done in the fragment shader. This was also extremely cheap and ended up looking really, really good (especially for the low-poly aesthetic we were trying to achieve).

Not only that, we were able to implement it in a couple of hours, compared to the 10+ hours we spent trying to figure out how to run a more physically accurate fluid simulation which never actually worked. Furthermore, it should theoretically run on mobile or the web, whereas either of the more complex solutions would have been totally impractical there. And since all of the computations are pretty simple, buoyancy becomes feasible without copying any data from the GPU to the CPU, because you only have to sample at a few points per ship per wave. (Our buoyancy was broken at the end, but it should be pretty simple to go in and fix.)
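To make the trick concrete, here is a rough CPU-side sketch in Python. It is not the jam code, just an illustration of the same idea. The names (`Wave`, `wave_force`, `surface_height`), the growth and decay rates, and the choice to scale the push by the wave's remaining energy are all assumptions made for the example. It also assumes the height formula reads as sin(distance / radius + time + per-wave offset), and that a wave only contributes inside its current radius.

```python
import math
from dataclasses import dataclass


@dataclass
class Wave:
    """A fake wave: an expanding sphere of influence (hypothetical structure)."""
    x: float
    y: float
    radius: float
    energy: float
    phase: float  # random offset unique to each wave

    def step(self, dt: float, growth_rate: float = 5.0, decay_rate: float = 1.0) -> bool:
        """Grow the sphere and drain energy. Returns False once the wave is spent.

        The rates are made-up values, not tuned numbers from the game.
        """
        self.radius += growth_rate * dt
        self.energy = max(0.0, self.energy - decay_rate * dt)
        return self.energy > 0.0


def wave_force(wave: Wave, boat_x: float, boat_y: float) -> tuple[float, float]:
    """Force on a boat: zero outside the sphere, otherwise away from the origin.

    Assumption: the magnitude equals the wave's remaining energy.
    """
    dx, dy = boat_x - wave.x, boat_y - wave.y
    dist = math.hypot(dx, dy)
    if dist == 0.0 or dist > wave.radius:
        return (0.0, 0.0)
    scale = wave.energy / dist  # normalise the direction, then scale by energy
    return (dx * scale, dy * scale)


def surface_height(waves: list[Wave], px: float, py: float, time: float) -> float:
    """Sample the faked ocean height at one point by summing every wave's sine term."""
    height = 0.0
    for w in waves:
        dist = math.hypot(px - w.x, py - w.y)
        if w.radius > 0.0 and dist <= w.radius:
            height += math.sin(dist / w.radius + time + w.phase)
    return height
```

Because `surface_height` is just a handful of sines, a buoyancy pass could call it at a few hull points per ship on the CPU instead of reading the render texture back from the GPU.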

So in short, don't bother computing anything that you don't have to. And for the love of all that is holy, if you're a bunch of college kids with very little experience in wave dynamics and not enough time to do thorough research, don't try to write a real-time wave simulation/propagation system in a hackathon where that's only a small part of the project. Just don't.